Overview of a check

A Check is an encapsulation of a data quality rule along with additional context such as associated notifications, tags, filters and tolerances.

There are two types of Checks in Qualytics, inferred and authored:

- Inferred checks are automatically generated by the Qualytics platform as part of a Profile Operation. Typically, inferred checks cover 80-90% of the rules that a user would need.
- Authored checks are manually authored by a user via the UI or API. Many types of checks can be authored through templates for common checks, and more complex rules can be expressed through SQL and UDFs (in Scala).
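To make the definition concrete, a check can be pictured as a small record that bundles a rule with its context. The sketch below is purely illustrative: the `Check` class and every field name in it are assumptions chosen for this example, not the Qualytics data model or API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Check:
    """Hypothetical model of a check; all field names are illustrative."""
    rule_type: str                      # e.g. "Not Null" or "Between"
    fields: List[str]                   # field(s) the rule applies to
    inferred: bool                      # True if generated by a Profile Operation
    properties: Dict[str, object] = field(default_factory=dict)  # rule parameters
    filter: Optional[str] = None        # optional filter on the records checked
    tags: List[str] = field(default_factory=list)
    notifications: List[str] = field(default_factory=list)
    tolerance: Optional[float] = None   # optional tolerance; semantics not modeled here


# An inferred check carries only what profiling could derive.
inferred_check = Check(rule_type="Not Null", fields=["order_id"], inferred=True)

# An authored check adds user-supplied parameters and context.
authored_check = Check(
    rule_type="Between",
    fields=["discount"],
    inferred=False,
    properties={"min": 0, "max": 1},
    tags=["finance"],
)
```

The only structural difference between the two instances is the `inferred` flag and how much context the user has attached, which mirrors the distinction drawn above.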

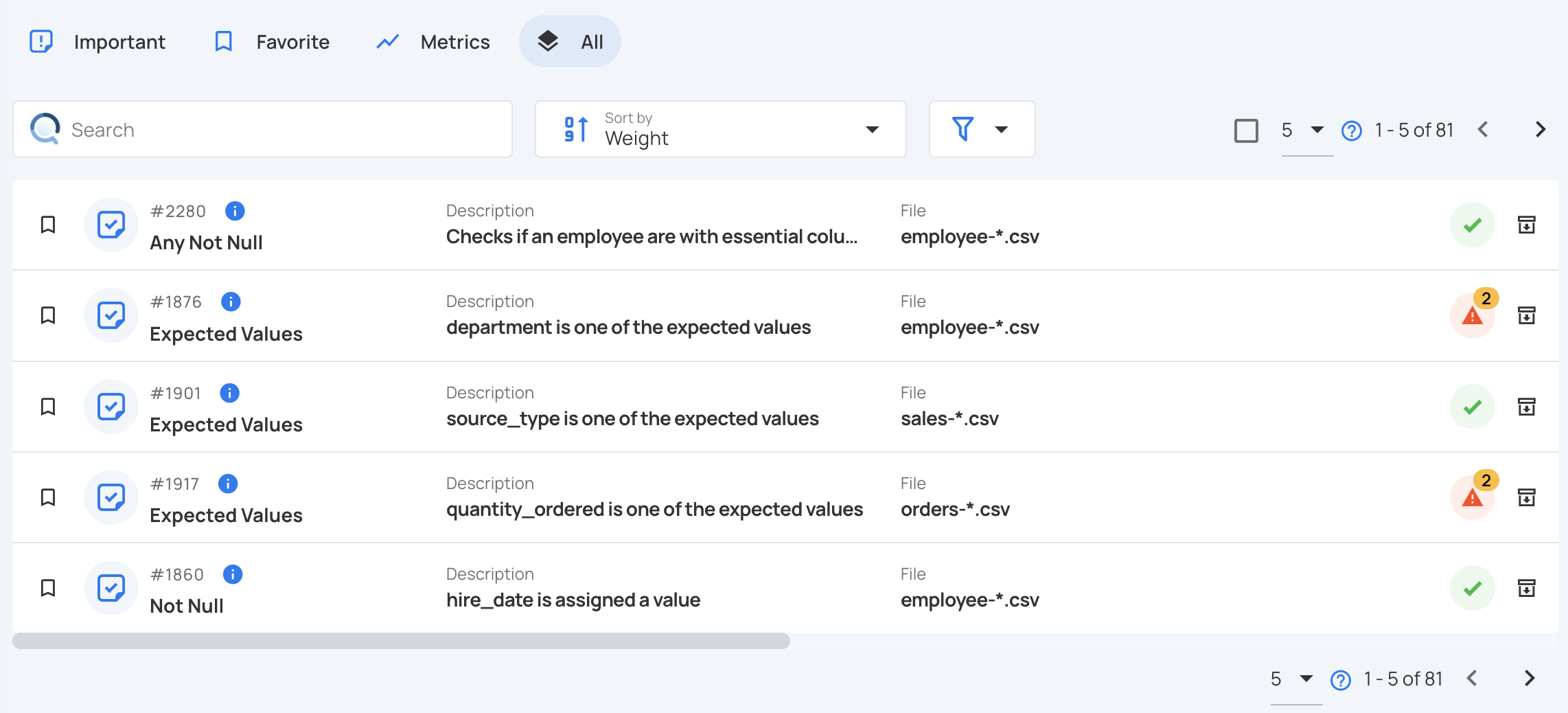

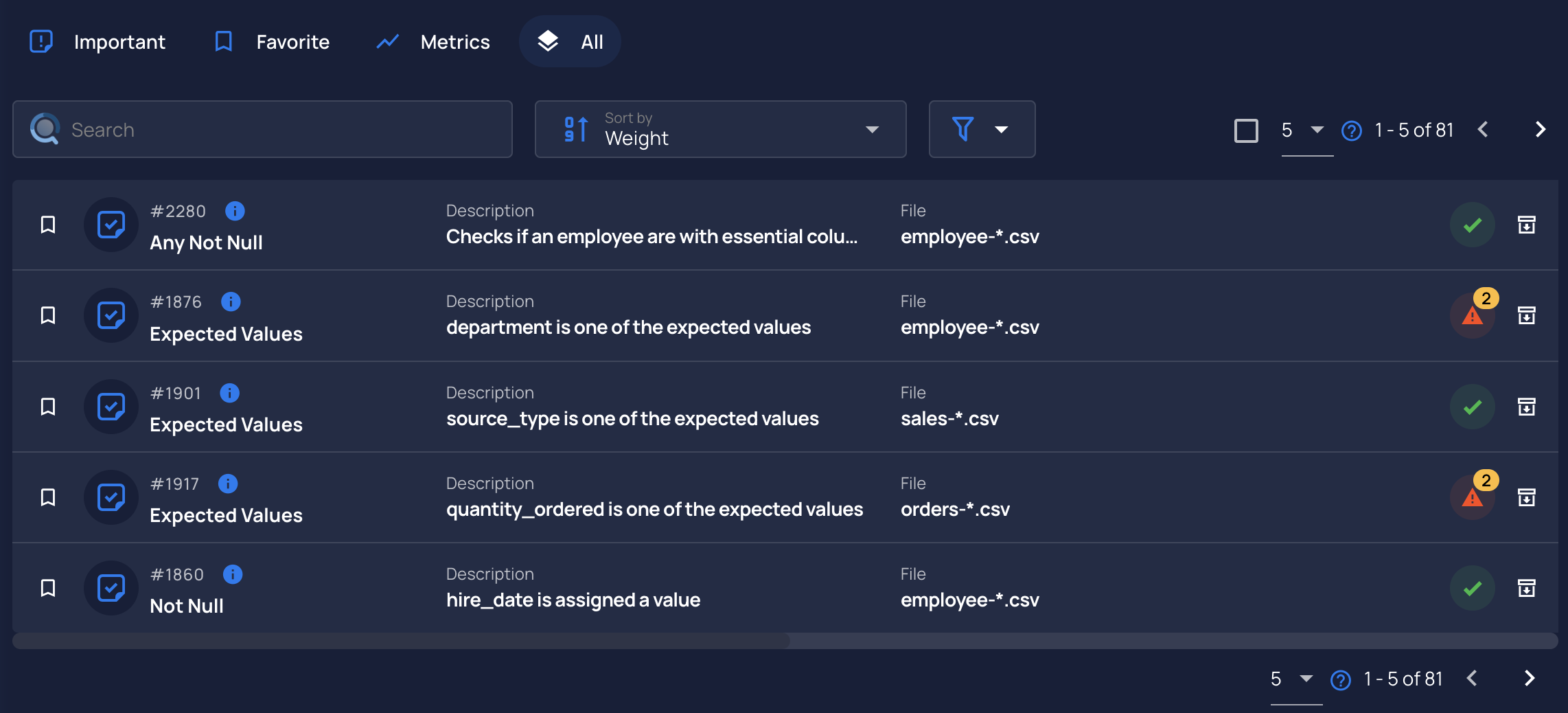

Checks Tab

- Select a Datastore.
- Click on the Checks tab.
- You will see all checks related to your Datastore.

List of rule types

- After Date Time: Asserts that the field is a timestamp later than a specific date and time.
- Any Not Null: Asserts that one of the fields must not be null.
- Before Date Time: Asserts that the field is a timestamp earlier than a specific date and time.
- Between: Asserts that values are equal to or between two numbers.
- Between Times: Asserts that values are equal to or between two dates or times.
- Contains Credit Card: Asserts that the values contain a credit card number.
- Contains Email: Asserts that the values contain email addresses.
- Contains Social Security Number: Asserts that the values contain social security numbers.
- Contains Url: Asserts that the values contain valid URLs.
- Data Type: Asserts that the data is of a specific type.
- Distinct: Asserts on the ratio of the count of distinct values to the total count (e.g. [a, a, b] is 2/3).
- Distinct Count: Asserts on the approximate distinct count of the given column.
- Equal To Field: Asserts that this field is equal to another field.
- Exists In: Asserts that the rows of a compared table/field of a specific Datastore exist in the selected table/field.
- Expected Values: Asserts that values are contained within a list of expected values.
- Field Count: Asserts that there must be exactly a specified number of fields.
- Greater Than: Asserts that the field is a number greater than (or equal to) a value.
- Greater Than Field: Asserts that this field is greater than another field.
- Is Credit Card: Asserts that the values are credit card numbers.
- Is Not Replica Of: Asserts that the dataset created by the targeted field(s) is not replicated by the referred field(s).
- Is Replica Of: Asserts that the dataset created by the targeted field(s) is replicated by the referred field(s).
- Less Than: Asserts that the field is a number less than (or equal to) a value.
- Less Than Field: Asserts that this field is less than another field.
- Matches Pattern: Asserts that a field must match a pattern.
- Max Length: Asserts that a string has a maximum length.
- Max Value: Asserts that a field has a maximum value.
- Metric: Records the value of the selected field during each scan operation and asserts that the value is within a specified range (inclusive).
- Min Length: Asserts that a string has a minimum length.
- Min Partition Size: Asserts the minimum number of records that should be loaded from each file or table partition.
- Min Value: Asserts that a field has a minimum value.
- Not Exist In: Asserts that values assigned to this field do not exist as values in another field.
- Not Future: Asserts that the field's value is not in the future.
- Not Negative: Asserts that this is a non-negative number.
- Not Null: Asserts that the field's value is not explicitly set to nothing.
- Positive: Asserts that this is a positive number.
- Predicted By: Asserts that the actual value of a field falls within an expected predicted range.
- Required Fields: Asserts that all of the selected fields must be present in the datastore.
- Required Values: Asserts that all of the defined values must be present at least once within a field.
- Satisfies Expression: Evaluates the given expression (any valid Spark SQL) for each record.
- Sum: Asserts that the sum of a field is a specific amount.
- Time Distribution Size: Asserts that the count of records for each interval of a timestamp is between two numbers.
- Unique: Asserts that the field's value is unique.
- User Defined Function: Asserts that the given user-defined function (as Scala script) evaluates to true over the field's value.
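
A few of the rule types listed above have semantics simple enough to sketch in plain code. The functions below are a minimal illustration of what each rule asserts, written in Python over in-memory values. The function names are hypothetical, null handling is simplified, and this is not how Qualytics evaluates checks internally.

```python
from fractions import Fraction
from typing import Any, Dict, Iterable, Sequence


def distinct_ratio(values: Sequence[Any]) -> Fraction:
    """Distinct: ratio of the count of distinct values to the total count."""
    if not values:
        raise ValueError("distinct ratio is undefined for an empty column")
    return Fraction(len(set(values)), len(values))


def between(value: float, low: float, high: float) -> bool:
    """Between: the value is equal to or between the two bounds."""
    return low <= value <= high


def any_not_null(record: Dict[str, Any], fields: Iterable[str]) -> bool:
    """Any Not Null: at least one of the listed fields is not null."""
    return any(record.get(name) is not None for name in fields)


def expected_values(values: Iterable[Any], allowed: Iterable[Any]) -> bool:
    """Expected Values: every value is contained in the allowed list."""
    allowed_set = set(allowed)
    return all(v in allowed_set for v in values)


def required_values(values: Iterable[Any], required: Iterable[Any]) -> bool:
    """Required Values: each required value appears at least once."""
    return set(required) <= set(values)


# The example from the Distinct entry: [a, a, b] has 2 distinct values out of 3.
print(distinct_ratio(["a", "a", "b"]))  # prints 2/3
```

Note the difference between the last two functions: Expected Values constrains which values may appear, while Required Values constrains which values must appear.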