Overview of an enrichment datastore

An Enrichment Datastore is a user-managed storage location where the Qualytics platform records and accesses metadata through a set of system-defined tables. It is purpose-built to capture metadata generated by the platform's profiling and scanning operations.

Key Points

-

Metadata Storage: The Enrichment Datastore acts as a dedicated mechanism for writing and retaining metadata that the platform generates. This includes information about anomalies, quality checks, field profiling, and additional details that enrich the source data.

-

Feature Enablement: By using the Enrichment Datastore, the platform unlocks certain features such as the previewing of source records. For instance, when an anomaly is detected, the platform typically previews a limited set of affected records. For a comprehensive view and persistent access, the Enrichment Datastore captures and maintains a complete snapshot of the source records associated with the anomalies.

-

User-Managed Location: While the Qualytics platform handles the generation and processing of metadata, the actual storage is user-managed. This means that the user maintains control over the Enrichment Datastore, deciding where and how this data is stored, adhering to their governance and compliance requirements.

-

Insight and Reporting: Beyond storing metadata, the Enrichment Datastore allows users to derive actionable insights and develop custom reports for a variety of use cases, from compliance tracking to data quality improvement initiatives.

Table Types

The Enrichment Datastore contains several types of tables, each serving a specific purpose in the data enrichment and remediation process. These tables are categorized into:

- Enrichment Tables

- Remediation Tables

- Metadata Tables

Enrichment Tables

When anomalies are detected, the platform writes metadata into two primary enrichment tables:

- <enrichment_prefix>_failed_checks

- <enrichment_prefix>_source_records

- <enrichment_prefix>_scan_operations

_FAILED_CHECKS Table

Acts as an associative entity that consolidates information on failed checks, associating anomalies with their respective quality checks.

Columns

| Name | Data Type | Description |

|---|---|---|

| QUALITY_CHECK_ID | NUMBER | Unique identifier for the quality check. |

| ANOMALY_UUID | STRING | UUID for the anomaly detected. |

| QUALITY_CHECK_MESSAGE | STRING | Message describing the quality check outcome. |

| SUGGESTED_REMEDIATION_FIELD | STRING | Field suggesting remediation. |

| SUGGESTED_REMEDIATION_VALUE | STRING | Suggested value for remediation. |

| SUGGESTED_REMEDIATION_SCORE | FLOAT | Score indicating confidence in remediation. |

| QUALITY_CHECK_RULE_TYPE | STRING | Type of rule applied for quality check. |

| QUALITY_CHECK_TAGS | STRING | Tags associated with the quality check. |

| QUALITY_CHECK_PARAMETERS | STRING | Parameters used for the quality check. |

| QUALITY_CHECK_DESCRIPTION | STRING | Description of the quality check. |

| OPERATION_ID | NUMBER | Identifier for the operation detecting anomaly. |

| DETECTED_TIME | TIMESTAMP | Timestamp when the anomaly was detected. |

| SOURCE_CONTAINER | STRING | Name of the source data container. |

| SOURCE_PARTITION | STRING | Partition of the source data. |

| SOURCE_DATASTORE | STRING | Datastore where the source data resides. |

Info

This table is not characterized by unique ANOMALY_UUID or QUALITY_CHECK_ID values alone. Instead, the combination of ANOMALY_UUID and QUALITY_CHECK_ID serves as a composite key, uniquely identifying each record in the table.

_SOURCE_RECORDS Table

Stores source records in JSON format, primarily to enable the preview source record feature in the Qualytics App.

Columns

| Name | Data Type | Description |

|---|---|---|

| SOURCE_CONTAINER | STRING | Name of the source data container. |

| SOURCE_PARTITION | STRING | Partition of the source data. |

| ANOMALY_UUID | STRING | UUID for the associated anomaly. |

| CONTEXT | STRING | Contextual information for the anomaly. |

| RECORD | STRING | JSON representation of the source record. |

_SCAN_OPERATIONS Table

Captures and stores the results of every scan operation conducted on the Qualytics Platform.

Columns

| Name | Data Type | Description |

|---|---|---|

| OPERATION_ID | NUMBER | Unique identifier for the scan operation. |

| DATASTORE_ID | NUMBER | Identifier for the source datastore associated with the operation. |

| CONTAINER_ID | NUMBER | Identifier for the container associated with the operation. |

| CONTAINER_SCAN_ID | NUMBER | Identifier for the container scan associated with the operation. |

| PARTITION_NAME | STRING | Name of the source partition on which the scan operation is performed. |

| INCREMENTAL | BOOLEAN | Boolean flag indicating whether the scan operation is incremental. |

| RECORDS_PROCESSED | NUMBER | Total number of records processed during the scan operation. |

| ENRICHMENT_SOURCE_RECORD_LIMIT | NUMBER | Maximum number of records written to the enrichment for each anomaly detected. |

| MAX_RECORDS_ANALYZED | NUMBER | Maximum number of records analyzed in the scan operation. |

| ANOMALY_COUNT | NUMBER | Total number of anomalies identified in the scan operation. |

| START_TIME | TIMESTAMP | Timestamp marking the start of the scan operation. |

| END_TIME | TIMESTAMP | Timestamp marking the end of the scan operation. |

| RESULT | STRING | Textual representation of the scan operation's status. |

| MESSAGE | STRING | Detailed message regarding the process of the scan operation. |

Remediation Tables

When anomalies are detected in a container, the platform has the capability to create remediation tables in the Enrichment Datastore. These tables are detailed snapshots of the affected container, capturing the state of the data at the time of anomaly detection. They also include additional columns for metadata and remediation purposes. However, the creation of these tables depends upon the chosen remediation strategy during the scan operation.

Currently, there are three types of remediation strategies:

- None: No remediation tables will be created, regardless of anomaly detection.

- Append: Replicate source containers using an append-first strategy.

- Overwrite: Replicate source containers using an overwrite strategy.

Note

The naming convention for the remediation tables follows the pattern of <enrichment_prefix>_remediation_<container_id>, where <enrichment_prefix> is user-defined during the Enrichment Datastore configuration and <container_name> corresponds to the original source container.

Illustrative Table

_{ENRICHMENT_CONTAINER_PREFIX}_REMEDIATION_{CONTAINER_ID}

This remediation table is an illustrative snapshot of the "Orders" container for reference purposes.

| Name | Data Type | Description |

|---|---|---|

| _QUALYTICS_SOURCE_PARTITION | STRING | The partition from the source data container. |

| ANOMALY_UUID | STRING | Unique identifier of the anomaly. |

| _QUALYTICS_APPEARS_IN | STRING | Indicates whether the record came from the target or reference container in relation to the check definition. |

| ORDERKEY | NUMBER | Unique identifier of the order. |

| CUSTKEY | NUMBER | The customer key related to the order. |

| ORDERSTATUS | CHAR | The status of the order (e.g., 'F' for 'finished'). |

| TOTALPRICE | FLOAT | The total price of the order. |

| ORDERDATE | DATE | The date when the order was placed. |

| ORDERPRIORITY | STRING | Priority of the order (e.g., 'urgent'). |

| CLERK | STRING | The clerk who took the order. |

| SHIPPRIORITY | INTEGER | The priority given to the order for shipping. |

| COMMENT | STRING | Comments related to the order. |

Note

In addition to capturing the original container fields, the platform includes three metadata columns designed to assist in the analysis and remediation process.

- _QUALYTICS_SOURCE_PARTITION

- ANOMALY_UUID

- _QUALYTICS_APPEARS_IN

- This optional column appears based on specific check rule types, such as

Is Replica Of, to provide additional context.

- This optional column appears based on specific check rule types, such as

Metadata Tables

The Qualytics platform enables users to manually export metadata into the enrichment datastore, providing a structured approach to data analysis and management. These metadata tables are structured to reflect the evolving characteristics of data entities, primarily focusing on aspects that are subject to changes.

Currently, the following assets are available for exporting:

- _<enrichment_prefix>_export_anomalies

- _<enrichment_prefix>_export_checks

- _<enrichment_prefix>_export_field_profiles

Note

The strategy used for managing these metadata tables employs a create or replace approach, meaning that the export process will create a new table if one does not exist, or replace it entirely if it does. This means that any previous data will be overwritten.

For more detailed information on exporting metadata, please refer to the export documentation.

_EXPORT_ANOMALIES Table

Contains metadata from anomalies in a distinct normalized format. This table is specifically designed to capture the mutable states of anomalies, emphasizing their status changes.

Columns

| Name | Data Type | Description |

|---|---|---|

| ID | NUMBER | Unique identifier for the anomaly. |

| CREATED | TIMESTAMP | Timestamp of anomaly creation. |

| UUID | UUID | Universal Unique Identifier of the anomaly. |

| TYPE | STRING | Type of the anomaly (e.g., 'shape'). |

| STATUS | STRING | Current status of the anomaly (e.g., 'Active'). |

| GLOBAL_TAGS | STRING | Tags associated globally with the anomaly. |

| CONTAINER_ID | NUMBER | Identifier for the associated container. |

| SOURCE_CONTAINER | STRING | Name of the source container. |

| DATASTORE_ID | NUMBER | Identifier for the associated datastore. |

| SOURCE_DATASTORE | STRING | Name of the source datastore. |

| GENERATED_AT | TIMESTAMP | Timestamp when the export was generated. |

_EXPORT_CHECKS Table

Contains metadata from quality checks.

Columns

| Name | Data Type | Description |

|---|---|---|

| ADDITIONAL_METADATA | STRING | JSON-formatted string containing additional metadata for the check. |

| COVERAGE | FLOAT | Represents the expected tolerance of the rule. |

| CREATED | STRING | Created timestamp of the check. |

| DELETED_AT | STRING | Deleted timestamp of the check. |

| DESCRIPTION | STRING | Description of the check. |

| FIELDS | STRING | Fields involved in the check separated by comma. |

| FILTER | STRING | Criteria used to filter data when asserting the check. |

| GENERATED_AT | STRING | Indicates when the export was generated. |

| GLOBAL_TAGS | STRING | Represents the global tags of the check separated by comma. |

| HAS_PASSED | BOOLEAN | Boolean indicator of whether the check has passed its last assertion . |

| ID | NUMBER | Unique identifier for the check. |

| INFERRED | BOOLEAN | Indicates whether the check was inferred by the platform. |

| IS_NEW | BOOLEAN | Flags if the check is new. |

| LAST_ASSERTED | STRING | Timestamp of the last assertion performed on the check. |

| LAST_EDITOR | STRING | Represents the last editor of the check. |

| LAST_UPDATED | STRING | Represents the last updated timestamp of the check. |

| NUM_CONTAINER_SCANS | NUMBER | Number of containers scanned. |

| PROPERTIES | STRING | Specific properties for the check in a JSON format. |

| RULE_TYPE | STRING | Type of rule applied in the check. |

| WEIGHT | FLOAT | Represents the weight of the check. |

| DATASTORE_ID | NUMBER | Identifier of the datastore used in the check. |

| CONTAINER_ID | NUMBER | Identifier of the container used in the check. |

| TEMPLATE_ID | NUMBER | Identifier of the template id associated tothe check. |

| IS_TEMPLATE | BOOLEAN | Indicates wheter the check is a template or not. |

| SOURCE_CONTAINER | STRING | Name of the container used in the check. |

| SOURCE_DATASTORE | STRING | Name of the datastore used in the check. |

_EXPORT_CHECK_TEMPLATES Table

Contains metadata from check templates.

Columns

| Name | Data Type | Description |

|---|---|---|

| ADDITIONAL_METADATA | STRING | JSON-formatted string containing additional metadata for the check. |

| COVERAGE | FLOAT | Represents the expected tolerance of the rule. |

| CREATED | STRING | Created timestamp of the check. |

| DELETED_AT | STRING | Deleted timestamp of the check. |

| DESCRIPTION | STRING | Description of the check. |

| FIELDS | STRING | Fields involved in the check separated by comma. |

| FILTER | STRING | Criteria used to filter data when asserting the check. |

| GENERATED_AT | STRING | Indicates when the export was generated. |

| GLOBAL_TAGS | STRING | Represents the global tags of the check separated by comma. |

| ID | NUMBER | Unique identifier for the check. |

| IS_NEW | BOOLEAN | Flags if the check is new. |

| IS_TEMPLATE | BOOLEAN | Indicates wheter the check is a template or not. |

| LAST_EDITOR | STRING | Represents the last editor of the check. |

| LAST_UPDATED | STRING | Represents the last updated timestamp of the check. |

| PROPERTIES | STRING | Specific properties for the check in a JSON format. |

| RULE_TYPE | STRING | Type of rule applied in the check. |

| TEMPLATE_CHECKS_COUNT | NUMBER | The count of associated checks to the template. |

| TEMPLATE_LOCKED | BOOLEAN | Indicates wheter the check template is locked or not. |

| WEIGHT | FLOAT | Represents the weight of the check. |

_EXPORT_FIELD_PROFILES Table

Contains metadata from field profiles.

Columns

| Name | Data Type | Description |

|---|---|---|

| APPROXIMATE_DISTINCT_VALUES | FLOAT | Estimated number of distinct values in the field. |

| COMPLETENESS | FLOAT | Ratio of non-null entries to total entries in the field. |

| CONTAINER_ID | NUMBER | Identifier for the container holding the field. |

| SOURCE_CONTAINER | STRING | Name of the container holding the field. |

| CONTAINER_STORE_TYPE | STRING | Storage type of the container. |

| CREATED | STRING | Date when the field profile was created. |

| DATASTORE_ID | NUMBER | Identifier for the datastore containing the field. |

| SOURCE_DATASTORE | STRING | Name of the datastore containing the field. |

| DATASTORE_TYPE | STRING | Type of datastore. |

| ENTROPY | FLOAT | Measure of randomness in the information being processed. |

| FIELD_GLOBAL_TAGS | STRING | Global tags associated with the field. |

| FIELD_ID | NUMBER | Unique identifier for the field. |

| FIELD_NAME | STRING | Name of the field being profiled. |

| FIELD_PROFILE_ID | NUMBER | Identifier for the field profile record. |

| FIELD_QUALITY_SCORE | FLOAT | Score representing the quality of the field. |

| FIELD_TYPE | STRING | Data type of the field. |

| FIELD_WEIGHT | NUMBER | Weight assigned to the field for quality scoring. |

| GENERATED_AT | STRING | Date when the field profile was generated. |

| HISTOGRAM_BUCKETS | STRING | Distribution of data within the field represented as buckets. |

| IS_NOT_NORMAL | BOOLEAN | Indicator of whether the field data distribution is not normal. |

| KLL | STRING | Sketch summary of the field data distribution. |

| KURTOSIS | FLOAT | Measure of the tailedness of the probability distribution. |

| MAX | FLOAT | Maximum value found in the field. |

| MAX_LENGTH | FLOAT | Maximum length of string entries in the field. |

| MEAN | FLOAT | Average value of the field's data. |

| MEDIAN | FLOAT | Middle value in the field's data distribution. |

| MIN | FLOAT | Minimum value found in the field. |

| MIN_LENGTH | FLOAT | Minimum length of string entries in the field. |

| NAME | STRING | Descriptive name of the field. |

| Q1 | FLOAT | First quartile in the field's data distribution. |

| Q3 | FLOAT | Third quartile in the field's data distribution. |

| SKEWNESS | FLOAT | Measure of the asymmetry of the probability distribution. |

| STD_DEV | FLOAT | Standard deviation of the field's data. |

| SUM | FLOAT | Sum of all numerical values in the field. |

| TYPE_DECLARED | BOOLEAN | Indicator of whether the field type is explicitly declared. |

| UNIQUE_DISTINCT_RATIO | FLOAT | Ratio of unique distinct values to the total distinct values. |

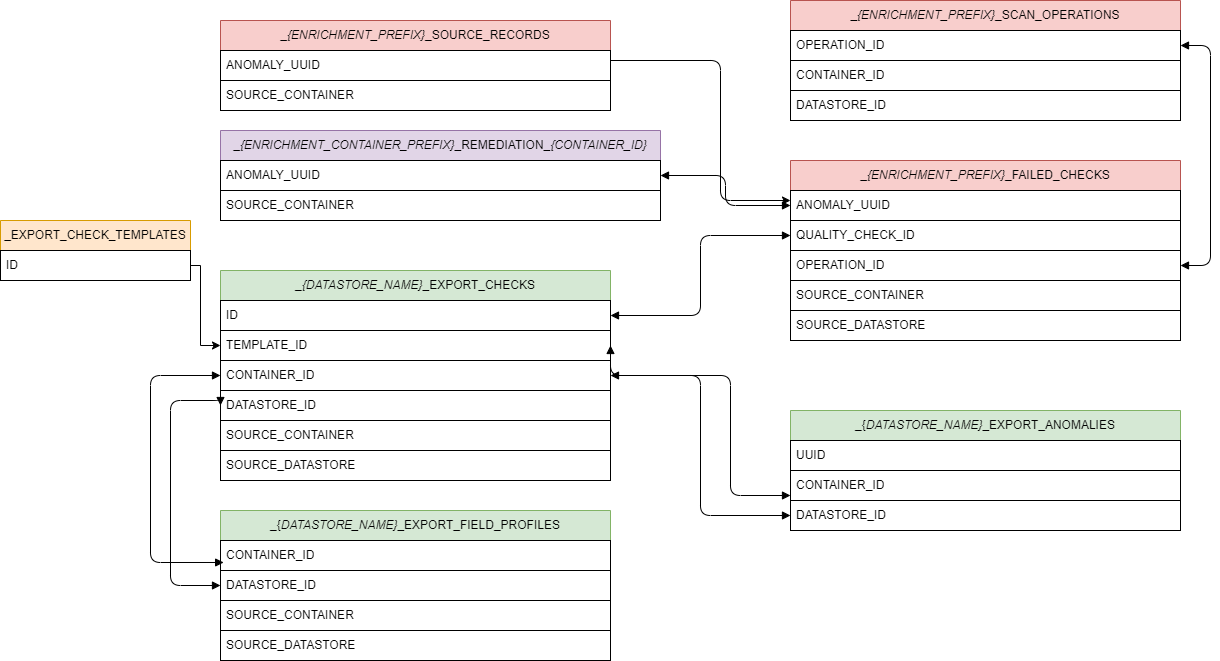

Diagram

The diagram below provides a visual representation of the associations between various tables in the Enrichment Datastore. It illustrates how tables can be joined to track and analyze data across different processes.

Handling JSON and string splitting

SELECT

PARSE_JSON(ADDITIONAL_METADATA):metadata_1::string AS Metadata1_Key1,

PARSE_JSON(ADDITIONAL_METADATA):metadata_2::string AS Metadata2_Key1,

PARSE_JSON(ADDITIONAL_METADATA):metadata_3::string AS Metadata3_Key1,

-- Add more lines as needed up to MetadataN

CONTAINER_ID,

COVERAGE,

CREATED,

DATASTORE_ID,

DELETED_AT,

DESCRIPTION,

SPLIT_PART(FIELDS, ',', 1) AS Field1,

SPLIT_PART(FIELDS, ',', 2) AS Field2,

-- Add more lines as needed up to FieldN

FILTER,

GENERATED_AT,

SPLIT_PART(GLOBAL_TAGS, ',', 1) AS Tag1,

SPLIT_PART(GLOBAL_TAGS, ',', 2) AS Tag2,

-- Add more lines as needed up to TagN

HAS_PASSED,

ID,

INFERRED,

IS_NEW,

IS_TEMPLATE,

LAST_ASSERTED,

LAST_EDITOR,

LAST_UPDATED,

NUM_CONTAINER_SCANS,

PARSE_JSON(PROPERTIES):allow_other_fields::string AS Property_AllowOtherFields,

PARSE_JSON(PROPERTIES):assertion::string AS Property_Assertion,

PARSE_JSON(PROPERTIES):comparison::string AS Property_Comparison,

PARSE_JSON(PROPERTIES):datetime_::string AS Property_Datetime,

-- Add more lines as needed up to Property

RULE_TYPE,

SOURCE_CONTAINER,

SOURCE_DATASTORE,

TEMPLATE_ID,

WEIGHT

FROM "_EXPORT_CHECKS";

SELECT

(ADDITIONAL_METADATA::json ->> 'metadata_1') AS Metadata1_Key1,

(ADDITIONAL_METADATA::json ->> 'metadata_2') AS Metadata2_Key1,

(ADDITIONAL_METADATA::json ->> 'metadata_3') AS Metadata3_Key1,

-- Add more lines as needed up to MetadataN

CONTAINER_ID,

COVERAGE,

CREATED,

DATASTORE_ID,

DELETED_AT,

DESCRIPTION,

(string_to_array(FIELDS, ','))[1] AS Field1,

(string_to_array(FIELDS, ','))[2] AS Field2,

-- Add more lines as needed up to FieldN

FILTER,

GENERATED_AT,

(string_to_array(GLOBAL_TAGS, ','))[1] AS Tag1,

(string_to_array(GLOBAL_TAGS, ','))[2] AS Tag2,

-- Add more lines as needed up to TagN

HAS_PASSED,

ID,

INFERRED,

IS_NEW,

IS_TEMPLATE,

LAST_ASSERTED,

LAST_EDITOR,

LAST_UPDATED,

NUM_CONTAINER_SCANS,

(PROPERTIES::json ->> 'allow_other_fields') AS Property_AllowOtherFields,

(PROPERTIES::json ->> 'assertion') AS Property_Assertion,

(PROPERTIES::json ->> 'comparison') AS Property_Comparison,

(PROPERTIES::json ->> 'datetime_') AS Property_Datetime,

-- Add more lines as needed up to PropertyN

RULE_TYPE,

SOURCE_CONTAINER,

SOURCE_DATASTORE,

TEMPLATE_ID,

WEIGHT

FROM "_EXPORT_CHECKS";

SELECT

(ADDITIONAL_METADATA->>'$.metadata_1') AS Metadata1_Key1,

(ADDITIONAL_METADATA->>'$.metadata_2') AS Metadata2_Key1,

(ADDITIONAL_METADATA->>'$.metadata_3') AS Metadata3_Key1,

-- Add more lines as needed up to MetadataN

CONTAINER_ID,

COVERAGE,

CREATED,

DATASTORE_ID,

DELETED_AT,

DESCRIPTION,

SUBSTRING_INDEX(FIELDS, ',', 1) AS Field1,

-- Add more lines as needed up to FieldN

SUBSTRING_INDEX(GLOBAL_TAGS, ',', 1) AS Tag1,

-- Add more lines as needed up to TagN

HAS_PASSED,

ID,

INFERRED,

IS_NEW,

IS_TEMPLATE,

LAST_ASSERTED,

LAST_EDITOR,

LAST_UPDATED,

NUM_CONTAINER_SCANS,

(PROPERTIES->>'$.allow_other_fields') AS Property_AllowOtherFields,

(PROPERTIES->>'$.assertion') AS Property_Assertion,

(PROPERTIES->>'$.comparison') AS Property_Comparison,

(PROPERTIES->>'$.datetime_') AS Property_Datetime,

-- Add more lines as needed up to PropertyN

RULE_TYPE,

SOURCE_CONTAINER,

SOURCE_DATASTORE,

TEMPLATE_ID,

WEIGHT

FROM "_EXPORT_CHECKS";

Usage Notes

- Both metadata tables and remediation tables, are designed to be ephemeral and thus are recommended to be used as temporary datasets. Users are advised to move this data to a more permanent dataset for long-term storage and reporting.

- The anomaly UUID in the remediation tables acts as a link to the detailed data in the _anomaly enrichment table. This connection not only shows the number of failed checks but also provides insight into each one, such as the nature of the issue, the type of rule violated, and associated check tags. Additionally, when available, suggested remediation actions, including suggested field modifications and values, are presented alongside a score indicating the suggested action's potential effectiveness. This information helps users to better understand the specifics of each anomaly related to the remediation tables.

- The Qualytics platform is configured to capture and write a maximum of 10 rows of data per anomaly by default for both the _source_records enrichment table and the remediation tables. To adjust this limit, users can utilize the

enrichment_source_record_limitparameter within the Scan Operation settings. This parameter accepts a minimum value of 10 but allows the specification of a higher limit, up to an unrestricted number of rows per anomaly. It is important to note that if an anomaly is associated with fewer than 10 records, the platform will only write the actual number of records where the anomaly was detected.